IADR Abstract Archives

Students' Evaluation of Systematic Reviews Using the AMSTAR Tool

Objectives: It has been proposed that dental education should empower graduates to adapt continuously to new and evolving evidence. In 2007, the development of AMSTAR, a tool for assessing the quality of systematic reviews, was reported; it consists of a validated 11-item questionnaire.

The purpose of this abstract is to describe how junior dental students apply the AMSTAR tool to evaluate systematic reviews.

Methods: Junior students received a lecture in which a systematic review was appraised with AMSTAR. Subsequently, in a 50-minute exam, each student independently evaluated the quality of a previously unseen article.

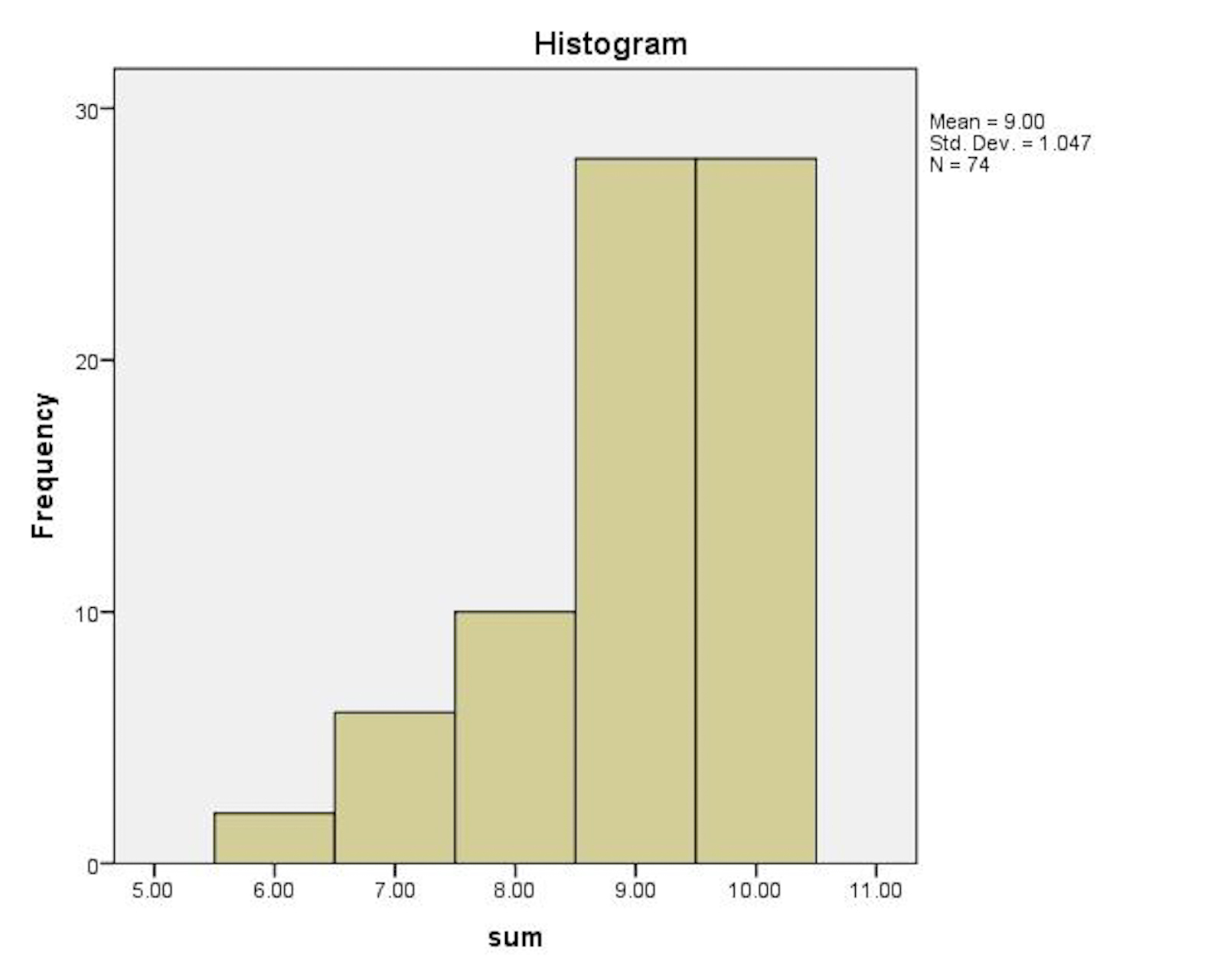

Results: Seventy-four junior students participated, and all of them (100%) answered every AMSTAR question. The frequency of answers is presented in Table 1. The mean number of correct answers was 9 (SD = 1.047; Min = 6; Max = 10; Fig 1).
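
The scoring behind these summary statistics can be sketched as follows. This is a minimal Python sketch, assuming each student's 11 responses are compared item by item with an answer key. `ANSWER_KEY`, `score`, and `summarize` are hypothetical names, the key values are illustrative rather than the key used in the study, and reporting the sample SD (n - 1 denominator) is an assumption, since the abstract does not state which SD was computed.

```python
from statistics import mean, stdev

# Hypothetical answer key for the 11 AMSTAR items, using the AMSTAR response
# options "Y" (yes), "N" (no), "CA" (can't answer) and "NA" (not applicable).
# Illustrative only: this is NOT the key used in the study.
ANSWER_KEY = ["Y", "Y", "N", "Y", "N", "Y", "Y", "Y", "NA", "N", "Y"]


def score(responses, key=ANSWER_KEY):
    """Count how many of one student's responses match the answer key."""
    if len(responses) != len(key):
        raise ValueError("each student must answer every item")
    return sum(r == k for r, k in zip(responses, key))


def summarize(all_responses, key=ANSWER_KEY):
    """Return mean, SD, min and max of the per-student correct-answer counts."""
    scores = [score(r, key) for r in all_responses]
    return {
        "mean": mean(scores),
        "sd": stdev(scores),  # sample SD (n - 1); an assumption, not stated in the abstract
        "min": min(scores),
        "max": max(scores),
    }
```

For example, `summarize([ANSWER_KEY, ANSWER_KEY[:-1] + ["N"]])` scores one fully correct student (11) and one with the last item wrong (10).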

Spearman's nonparametric correlation analysis revealed a statistically significant correlation between the answers to questions 7 and 8. Cross-tabulation of these responses (Fisher's exact test, p < 0.001; Table 2) confirmed that the answers to these two questions are associated. This result was expected, because the two questions are linked by design (Table 3).
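
Both analyses can be sketched in dependency-free Python. This sketch assumes the answers to Q7 and Q8 have been coded numerically (for example 1 = correct, 0 = incorrect, a hypothetical coding) and, for Fisher's exact test, dichotomized into a 2 × 2 table; the abstract states neither the coding nor the dimensions of Table 2. The function names are hypothetical, and in practice a library routine such as SciPy's `scipy.stats.spearmanr` or `scipy.stats.fisher_exact` would normally be used instead.

```python
from math import comb, sqrt


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks (handles ties).

    Undefined (division by zero) if either variable is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(vx * vy)


def crosstab_2x2(q7, q8):
    """Cross-tabulate paired dichotomous answers as (a, b, c, d) = [[a, b], [c, d]]."""
    pairs = list(zip(q7, q8))
    a = sum(1 for x, y in pairs if x and y)
    b = sum(1 for x, y in pairs if x and not y)
    c = sum(1 for x, y in pairs if not x and y)
    d = sum(1 for x, y in pairs if not x and not y)
    return a, b, c, d


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact p-value for the table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same margins
    whose probability does not exceed that of the observed table.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x):
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # Small relative tolerance so tables whose probability equals p_obs up to
    # floating-point rounding are still counted as "as extreme".
    return min(1.0, sum(p for p in probs if p <= p_obs * (1 + 1e-7)))
```

A typical use is `fisher_exact_2x2(*crosstab_2x2(q7_correct, q8_correct))`, where the two lists hold each student's coded answers in the same order.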

Conclusions: The pattern of answers to Q9 suggests that the construct of this question (Table 3) may be problematic, because students face more than one level of decision: first, if the results were combined meta-analytically, they must decide whether the combining methodology was appropriate (possible answers Yes or No); second, if the systematic review did not combine results, the correct answer is “Not Applicable”.
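
The two-level decision that Q9 requires can be made explicit as a small function. This is an illustrative Python sketch, not part of AMSTAR itself; `expected_q9_answer` and its parameters are hypothetical, and the answer strings follow the wording used in this abstract.

```python
from typing import Optional


def expected_q9_answer(results_combined: bool,
                       method_appropriate: Optional[bool] = None) -> str:
    """Return the expected Q9 answer (hypothetical helper for teaching purposes)."""
    if not results_combined:
        # No meta-analytic pooling: the item does not apply.
        return "Not Applicable"
    if method_appropriate is None:
        # Pooled results require a judgment on the combining methodology.
        raise ValueError("a pooled review needs a judgment on the combining method")
    # Pooling was done: judge whether the combining method was appropriate.
    return "Yes" if method_appropriate else "No"
```

Writing the item this way makes visible that students must first establish whether pooling occurred before the appropriateness judgment even arises.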

AMSTAR is a tool that may help students develop competency in appraising systematic reviews. It is important, however, to provide extensive training in how AMSTAR questions should be applied during literature evaluation. Special emphasis should be placed on identifying and evaluating the methodologies used to combine results in meta-analyses.